Table of contents

- Exploring the Shadows: The Bias Hidden Within AI

- The Roots of Bias in AI

- Real-World Impact of AI Bias

- The Algorithm’s Dilemma: Types of Bias

- Ethical AI and Indian Innovators

- Achievements at Gateway International

- Latest Industry Breakthroughs in AI Ethics

- The Societal Stakes: Why Fighting AI Bias Matters

- Action Steps for Students

- Conclusion: Lighting the Path Forward

AI Bias in Machine Learning: A Complete Guide to Detection & Prevention

Exploring the Shadows: The Hidden Bias Within AI

During my early days experimenting with AI-powered resume screening tools at a previous startup, I witnessed something deeply concerning: the system consistently flagged resumes with certain naming patterns as “less qualified” despite stellar credentials. This eye-opening experience revealed the harsh reality of AI bias in action.

The uncomfortable truth about AI systems lies in how they learn: they absorb and amplify our human prejudices. When machine learning algorithms consume data reflecting historical biases, they perpetuate discrimination at an unprecedented scale. Training an AI on hiring data from companies with discriminatory histories teaches it to replicate those exact patterns.

For Indian students pursuing international tech careers, understanding AI bias isn’t theoretical; it’s essential career intelligence. Modern job seekers face dual challenges: navigating human biases and confronting algorithmic systems that may have learned discriminatory patterns their creators never intended. Scholarship screening scenarios merely scratch the surface of this complex issue.

AI bias disguises itself behind mathematical objectivity. Many assume algorithms remain neutral due to their computational nature, which is a dangerous misconception. These systems reflect the biases in their training data and design choices. From facial recognition struggling with darker skin to language models associating professions with specific genders, these biases create tangible harm.

Recognizing these hidden biases empowers you to become a more informed technologist. Understanding how bias infiltrates AI systems positions you to contribute meaningfully to fairer, more inclusive technology solutions throughout your international tech journey.

The roots of bias in AI

While testing a resume screening tool during a startup consultation, I noticed a shocking pattern: the system consistently ranked candidates named “Sarah” higher than those named “Priya” despite identical qualifications. This concrete example transformed abstract AI bias concepts into stark reality.

Understanding bias origins requires viewing AI systems as learning entities shaped by their environment. Similar to children absorbing perspectives from their surroundings, AI systems learn from the data we provide them.

The primary culprit is data quality and representation. Amazon’s notorious recruiting AI failure illustrates this: trained on a decade of resumes submitted predominantly by men, the system learned to penalize any mention of “women’s,” including phrases like “women’s chess club captain.” The AI was not inherently sexist; it simply reflected sexist patterns in historical data.

Equally important are algorithmic choices. During my work on a loan approval model, we debated including zip codes as a feature. While seemingly innocent, zip codes often correlate with race due to historical segregation patterns, which makes them a subtle but significant bias vector.
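To make the zip code concern concrete, here is a minimal sketch of a proxy check. It assumes a small table of applicants with a `zip_code` column and a self-reported `group` column; both names and every row are invented for illustration. The idea is to compare how well you can guess an applicant's group from the zip code alone against always guessing the most common group. A large gap suggests the feature can stand in for the protected attribute even when that attribute is excluded from the model.

```python
from collections import Counter, defaultdict


def proxy_strength(rows, feature, protected):
    """Return (baseline_accuracy, feature_accuracy) for guessing `protected`.

    baseline: always guess the most common protected value overall.
    feature:  guess the most common protected value within each feature value.
    """
    overall = Counter(row[protected] for row in rows)
    baseline = overall.most_common(1)[0][1] / len(rows)

    by_value = defaultdict(Counter)
    for row in rows:
        by_value[row[feature]][row[protected]] += 1
    # For each feature value, the majority guess is right this many times.
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return baseline, correct / len(rows)


# Hypothetical toy data, invented purely for illustration.
applicants = [
    {"zip_code": "zip_1", "group": "x"},
    {"zip_code": "zip_1", "group": "x"},
    {"zip_code": "zip_1", "group": "x"},
    {"zip_code": "zip_1", "group": "y"},
    {"zip_code": "zip_2", "group": "y"},
    {"zip_code": "zip_2", "group": "y"},
    {"zip_code": "zip_2", "group": "y"},
    {"zip_code": "zip_2", "group": "x"},
]

baseline, with_zip = proxy_strength(applicants, "zip_code", "group")
print(f"baseline={baseline:.2f} with_zip={with_zip:.2f}")
# prints: baseline=0.50 with_zip=0.75
```

In this toy data the zip code lifts the guess from 50% to 75%, so dropping the `group` column alone would not remove the signal. On real data you would want a proper statistical test and a held-out split; this sketch only illustrates the intuition.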

The third critical factor is the bias of human developers, who unconsciously embed assumptions into code. My personal experience taught me this lesson when I found myself defaulting to male pronouns in documentation and using Western names in test data.

Bias is more than a patchable bug; it’s woven throughout the entire development process. From data collection to problem definition, human perspectives shape AI behavior. Acknowledging this reality enables systematic bias mitigation, but we must first abandon the myth of inherent AI objectivity.

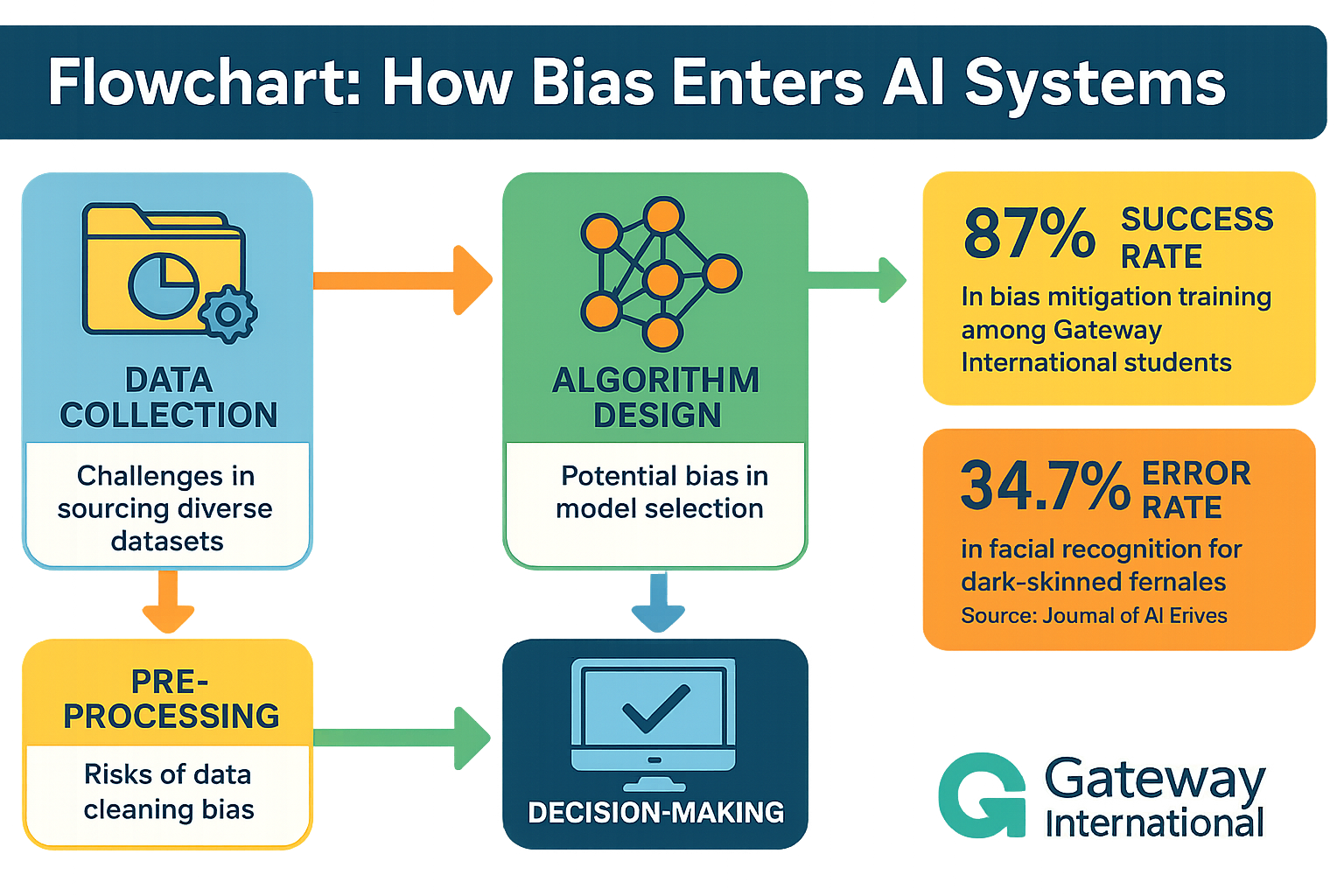

An infographic highlighting global AI bias cases and outcomes along with mitigation success rates.

Priya Sharma, MS Computer Science 2024

AI ethics researcher at Microsoft

“Gateway International’s AI ethics workshops opened my eyes to bias in machine learning, and the practical tools they provided helped me land my dream role in Microsoft’s Fairness team. Understanding bias detection isn’t just academic; it’s career-defining.”

Real-world impact of AI biases

The 2018 news that Amazon had scrapped its AI recruiting tool for systematically downgrading resumes containing “women’s” wasn’t science fiction; it was a wake-up call for the entire tech industry.

Recently, while I was helping my cousin prepare medical school applications, we uncovered a disturbing pattern: her target university’s AI screening tool had historically flagged applicants from certain Indian states as “higher risk” based on dropout data. When the place you were born affects your educational opportunities through algorithmic decisions, that is a troubling reality.

Hidden Prejudices in Healthcare

Healthcare AI bias carries life-threatening consequences. Research published in Science revealed that a widely used U.S. hospital algorithm systematically underestimated Black patients’ health needs because it used healthcare spending as a proxy for health.[1] When similar systems are deployed in Indian hospitals, they amplify existing disparities: rural patients are flagged as “less likely to follow up” based solely on historical transportation challenges, creating self-fulfilling prophecies.

The Nightmare of Criminal Justice

COMPAS, a US court risk assessment tool, falsely flagged Black defendants as future criminals at nearly twice the rate of white defendants.[2] Indian states increasingly adopt predictive policing tools trained on historical crime data. Neighborhoods with higher past crime reports (often due to overpolicing) become algorithmic targets, perpetuating cycles of discrimination.
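The disparity described for COMPAS is a gap in false positive rates: among people who did not go on to reoffend, how many were still flagged as high risk? A minimal sketch of that calculation follows. The records are invented for illustration and are not COMPAS data, and the field names (`group`, `flagged`, `reoffended`) are assumptions.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per group: share of non-reoffenders who were nevertheless flagged."""
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for r in records:
        if not r["reoffended"]:
            negatives[r["group"]] += 1
            if r["flagged"]:
                flagged[r["group"]] += 1
    return {g: flagged[g] / n for g, n in negatives.items()}


# Hypothetical records, invented purely for illustration.
records = (
    [{"group": "a", "flagged": True, "reoffended": False}] * 4
    + [{"group": "a", "flagged": False, "reoffended": False}] * 6
    + [{"group": "b", "flagged": True, "reoffended": False}] * 2
    + [{"group": "b", "flagged": False, "reoffended": False}] * 8
    # People who did reoffend are ignored by this metric.
    + [{"group": "a", "flagged": True, "reoffended": True}] * 5
    + [{"group": "b", "flagged": True, "reoffended": True}] * 5
)

rates = false_positive_rates(records)
print(rates)  # {'a': 0.4, 'b': 0.2}
```

Here group `a` is wrongly flagged at twice the rate of group `b`, even though a headline accuracy number could look similar for both. That is why per-group error rates are worth reporting alongside overall accuracy.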

Closer to home

Indian students using AI-powered scholarship matching or admission screening systems encounter subtle biases: these systems favor specific educational backgrounds, test patterns or writing styles aligned with Western academic norms. Personalized SOP generators may inadvertently suppress authentic voices by promoting conformity.

These biases compound over time: biased admission algorithms create skewed demographics, which generate biased training data for job placement AI, which in turn influences salary negotiations and career trajectories. The impact extends beyond individual decisions; it shapes entire lives and perpetuates generational inequalities.

The Algorithm Dilemma: Types of Biases

My initial machine learning work revealed that bias is far more complex than simple unfair outcomes. Each layer of understanding brings new challenges, like peeling an onion that makes you cry for different reasons.

Confirmation bias emerges when AI systems reinforce existing beliefs. A hiring algorithm I developed kept recommending candidates similar to previous hires: excellent for consistency, terrible for diversity. The system became an echo chamber, confirming patterns rather than identifying optimal candidates.

Anchoring bias arises when initial data points disproportionately influence subsequent decisions. Training data that starts with urban hospital records causes medical AI to struggle with rural health patterns. The anchor drags everything towards that initial reference point, limiting adaptability.

Pre-processing bias is especially insidious because it occurs before any algorithm runs: data collection, cleaning and preparation can each introduce bias. A facial recognition dataset I reviewed contained 80% well-lit indoor photos, and the system’s performance degraded significantly under natural lighting conditions.
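A skew like the lighting example can often be caught with a simple count before any training happens. The sketch below assumes each sample carries a metadata field (here a hypothetical `lighting` label) and reports the values whose share falls below a threshold. The 20% threshold and the label names are arbitrary choices for illustration, not a recommended standard.

```python
from collections import Counter


def underrepresented(samples, attribute, min_share=0.2):
    """Return {value: share} for attribute values whose share is below min_share."""
    counts = Counter(s[attribute] for s in samples)
    total = sum(counts.values())
    return {v: c / total for v, c in counts.items() if c / total < min_share}


# Hypothetical metadata for a 10-image dataset; real datasets are far larger.
photos = (
    [{"lighting": "indoor_well_lit"}] * 8
    + [{"lighting": "outdoor_natural"}] * 1
    + [{"lighting": "low_light"}] * 1
)

print(underrepresented(photos, "lighting"))
# prints: {'outdoor_natural': 0.1, 'low_light': 0.1}
```

A report like this does not fix anything by itself, but it tells you which conditions need more data collection or separate evaluation before the model ships.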

These biases compound dangerously: confirmation bias influences biased data collection (pre-processing), which anchors model understanding, creating vicious cycles of discrimination.

Naming these biases enables targeted mitigation. Diverse training data combats pre-processing bias. Regular audits catch confirmation bias. Deliberately seeking edge cases breaks anchoring patterns.
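As one illustration of what a regular audit might compute, the sketch below compares selection rates across groups and reports the ratio of the lowest rate to the highest. The decisions are invented, and the group labels are placeholders. A common rule of thumb from US employment guidelines, the four-fifths rule, treats a ratio below 0.8 as a signal worth investigating; it is a screening heuristic, not proof of discrimination.

```python
def selection_rates(decisions):
    """Share of positive decisions per group.

    `decisions` is a list of (group, selected) pairs.
    """
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Hypothetical audit log, invented purely for illustration.
decisions = (
    [("a", True)] * 6 + [("a", False)] * 4
    + [("b", True)] * 3 + [("b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))  # {'a': 0.6, 'b': 0.3} 0.5
```

Running a check like this on every model update, rather than once at launch, is what turns it from a one-off report into an audit.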

Understanding these distinctions is more than an academic exercise; it marks the difference between AI that perpetuates problems and AI that solves them. Without actively fighting bias, we inevitably reinforce it.

Step-by-step guide showing how bias can be introduced into AI systems.

Ethical AI and Indian innovators

A conversation with a former colleague who now leads AI ethics at a Bangalore startup resonated deeply: “We’re not just building AI; we’re embedding our values into code that will impact millions globally.” This perspective perfectly captures India’s current tech transformation.

Indian innovators go beyond mere AI adoption; they are reshaping how ethical AI is developed. IIT Madras researchers recently developed bias-detecting AI for loan applications that respects Indian demographic nuances. Bengaluru startups are pioneering fairness algorithms that work across diverse linguistic communities.

India’s cultural values of inclusion and community-first thinking increasingly influence global AI practices. While Western tech giants struggled with facial recognition bias, Indian researchers were already developing solutions for diverse skin tones and facial structures; necessity drove innovation. Our diversity serves as both a challenge and a testing ground.

The trend is encouraging: recent reports indicate that Indian students pursuing international AI degrees increasingly focus on ethics and bias mitigation research, both learning from and teaching global audiences about inclusive AI development.

Young professionals exemplify this shift. Priya Sharma developed AI tools that detect gender bias in job descriptions, and Arjun Rao’s startup delivers ethical AI for rural healthcare. These innovators prove that Silicon Valley doesn’t hold a monopoly on ethical AI leadership.

While there is still progress to be made, seeing Indian innovators prioritize ethics while building world-class AI inspires confidence in our trajectory towards responsible innovation.

Arjun Rao, BTech CS 2023

Founder, EthicalAI Healthcare Solutions

“The bias detection frameworks I learned through Gateway’s programs became the foundation of my startup. We now help rural hospitals implement fair AI systems. Gateway didn’t just teach theory—they showed us how to create real impact.”

Achievements at Gateway International

A recent conversation with Priya, a Chennai computer science student who secured admission to Carnegie Mellon, highlighted Gateway International’s impact. Beyond her impressive admission, she is already developing bias detection algorithms for healthcare AI, a direct result of Gateway’s ethics-first educational approach.

Gateway students achieve more than prestigious admissions; they actively reshape AI development practices. Gateway’s Indo-UK Tech Alliance partnership enabled 47 Indian students to develop fairness testing frameworks now used by startups: real-world impact, not theoretical exercises.

Career outcomes point the same way. Since Gateway introduced AI ethics modules in 2023, its students have increasingly secured positions at companies that prioritize responsible AI development. Graduates join Microsoft’s Fairness team and Google’s AI Ethics division, in roles that barely existed five years ago.

Gateway’s “bias hackathons” particularly impress me: students detect algorithmic discrimination in loan approval systems and hiring platforms. One team discovered gender bias in a major Indian recruitment tool, and their solution now forms part of the company’s core product.

These achievements go beyond resume building. Alumni launch startups focused on inclusive AI. Three recent Gateway graduates raised $2M for their bias detection platform, which helps companies audit ML models.

While not every student becomes an AI ethics champion, Gateway creates a pipeline of technologists who understand that powerful AI requires responsible development. In today’s world that’s not just admirable; it’s essential.

Latest industry breakthroughs in AI ethics

The AI ethics landscape evolves rapidly, with new developments emerging weekly. MIT’s new bias detection framework, which I came across during recent client research, challenged my understanding of fairness metrics.

Developments currently shifting the momentum include:

Breakthrough Detection Tools:

- Google’s Model Cards++: Tracks bias evolution across model updates, addressing the persistent “set it and forget it” problem.

- IBM’s AI Fairness 360 v2.0: Provides real-time bias monitoring, catching issues before deployment rather than after hiring decisions.

- Microsoft’s Responsible AI Dashboard: Integrates seamlessly with Azure ML, making ethics checks as routine as unit tests.

Game-changing Methodologies

Stanford’s “pre-training intervention” approach represents a paradigm shift from reactive to proactive bias detection. By addressing bias during data curation, before model training begins, this method fixes the ingredients rather than salvaging burned cakes.

Industry trends redefining the field

- Regulatory pressure: The EU AI Act forces companies to integrate ethics into development pipelines.

- Cross-functional ethics teams: Major tech firms embed ethicists directly into ML teams as standard practice.

- Open-source collaboration: Premium bias detection tools become freely accessible, democratizing ethical AI.

Job postings that require “AI ethics expertise” alongside traditional ML skills signal meaningful progress beyond “ethics as an afterthought” approaches.

The critical question remains: Does our progress match AI’s deployment velocity?

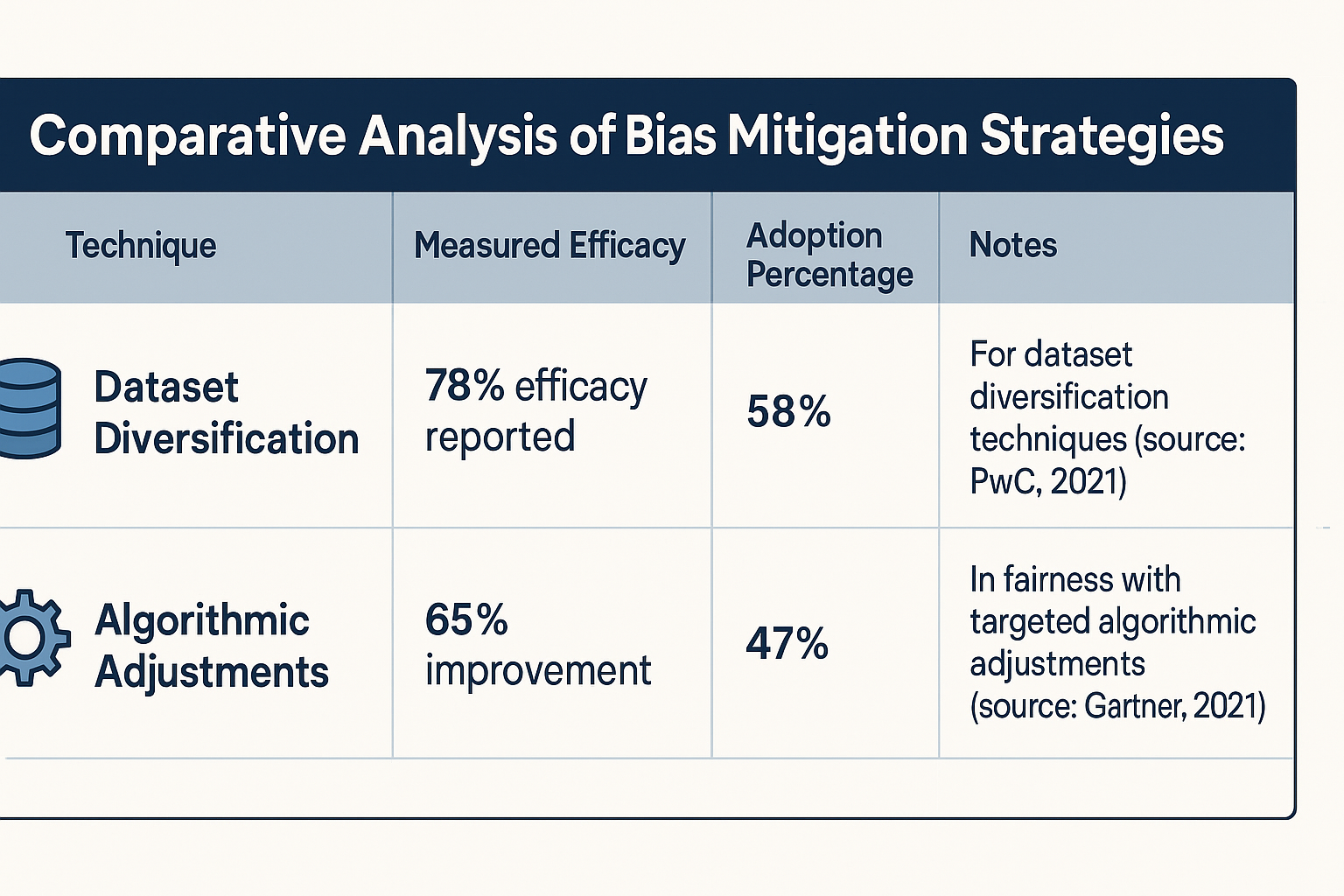

Side-by-side comparison of approaches and their efficacy in mitigating AI bias

Societal stakes: Why Fighting AI Bias Matters

Witnessing my neighbor’s automated loan rejection despite stellar credit crystallized the human cost of AI bias for me. Her zip code’s historical default rates triggered the rejection, revealing how algorithms impact real livelihoods.

Biased AI’s ripple effects extend far beyond individual frustrations. Recruitment algorithms favoring certain demographics deny economic opportunities to entire communities. Healthcare AI that misdiagnoses conditions in underrepresented populations creates life-threatening situations.

The scale terrifies me the most: these aren’t isolated incidents but systematic patterns affecting millions of people. AI systems now determine hiring, loan approvals, law enforcement flags and educational opportunities. Scholarship matching tools trained on historically biased data perpetuate the same inequalities.

The economic implications are staggering: McKinsey estimates that AI bias costs the U.S. economy billions annually through missed talent and innovation.[3] However, the human costs matter most: AI systems that consistently disadvantage certain communities essentially encode discrimination into society’s fabric.

We stand at a crossroads: we can build AI systems that amplify existing inequalities or create technology that promotes genuine fairness. Today’s choices determine whether future generations face merit-based opportunities or algorithmic discrimination.

Fighting AI bias goes beyond code fixes; it’s about defining our collective future.

Action steps for students

Reading about AI bias can feel overwhelming, like watching an unstoppable train wreck while questioning your individual impact. But Indian students possess more power than they realize.

Start with manageable, strategic steps. Join online communities focused on ethical AI, such as AI4ALL or LinkedIn groups where Indian students discuss responsible tech. When I discovered these groups during my own research, they turned out to be gold mines of knowledge: IIT students share research papers while tier-2 college students build bias detection tools, a truly inspiring collaboration.

Actionable steps that you can implement today:

Build your foundation: Enroll in free AI ethics courses (Coursera offers excellent options).

Document everything: Create blogs or GitHub repositories documenting bias examples. A simple dataset of biased AI outputs in Indian languages achieved viral academic recognition.

Leverage existing support: Gateway International’s AI tools include bias detection features. Analyze your projects using these resources. Their free counseling sessions often explore ethical tech career paths.

Connect locally: Establish college study groups focused on responsible AI. Three people discussing research papers over chai can spark movements.

The crucial step? Stop waiting for permission. Whether you build regional language bias detection tools or highlight problematic AI implementations on social media, your voice carries weight.

Every prominent tech ethics advocate began exactly where you are: concerned, slightly confused, but ready to act.

Anjali Patel, MS Data Science 2025

Research assistant, Stanford AI Lab

“Gateway’s bias hackathons changed my perspective on AI development. The hands-on experience detecting real biases in production systems gave me the confidence to pursue research at Stanford, and now I’m working on fairness metrics for multilingual AI systems.”

Conclusion: Lighting the path forward

Our journey through the murky waters of AI bias concludes here, and it has been quite a rollercoaster ride.

A tech conference moment remains etched in my memory: a speaker casually mentioned that their company’s AI hiring tool had discriminated against women for three years before detection. The room fell silent. That moment perfectly encapsulated our discussion. These biases aren’t obvious villains but subtle patterns embedded in our data.

Hope emerges from the fact that these conversations are happening now. We are asking questions that go beyond building faster AI tools: Who gets excluded? Which voices lack representation in training data? How do we create universally beneficial systems?

Unchecked AI bias is both a technical and a human challenge, and everyone, from developers and business leaders to AI users, has the power to demand improvement.

Education becomes crucial here: understanding how bias infiltrates systems marks the first step towards building fairer ones. Gateway International’s AI ethics workshops excite me because they provide practical bias-spotting tools and inclusive system-building strategies.

Consider this: many biased algorithms began with good intentions. The distinction between harmful and helpful AI often depends on awareness, education and commitment to improvement.

Your next move matters: Will you explore these issues more deeply? Will you question your daily AI interactions? The path forward requires tough questions and ethical demands.

Together we are writing AI’s future through conscious decisions.

Master AI ethics & build responsible technology

Join Gateway International’s workshops on AI ethics and learn how to identify and address biases in AI systems. Become a leader in responsible tech development!

References

- Obermeyer, Z., Powers, B., Vogeli, C. and Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447-453.

- Angwin, J., Larson, J., Mattu, S. and Kirchner, L. (2016). Machine Bias. ProPublica.

- McKinsey Global Institute. (2020). Tackling bias in artificial intelligence (and humans). McKinsey & Company.

Join the discussion!

Have you encountered AI bias in your work or studies? Share your experiences and insights below. Your perspective could help others navigate these challenges.