Table of contents

- Unveiling the Shadows: The Persistent Bias in AI Systems

- Digging Deeper: What Makes AI Biased?

- From the Ground Up: The Architecture of Bias

- Bias in India: Closer to Home Examples

- The Ethics of AI: Building a Fairer Future

- Globally United: International Efforts in AI Bias Mitigation

- Tech Giants’ Role: Corporate Responsibility and Innovation

- Empowering Through Learning: How Gateway International Helps

- Step Up: Actions Indian Students Can Take

- Your Voice Matters: Join the Conversation on AI Fairness

Unveiling the Shadows: The Persistent Bias in AI Systems

Recently, I saw my cousin’s loan application rejected by an AI-powered banking system. The kicker? When a human reviewer looked at it later, they approved it instantly. This isn’t just a glitch; it’s the dark reality of AI bias that we need to talk about.

AI bias occurs when machine learning systems make unfair decisions based on flawed training data or algorithmic design. Think of it like teaching a child from only one perspective: they’ll grow up seeing the world through that narrow lens. When an AI learns from historical data that reflects societal prejudices, it doesn’t just mirror these biases; it amplifies them at scale.

The consequences hit hard. From facial recognition systems that struggle with darker skin to recruitment AIs that favor male candidates for tech roles, these biases aren’t abstract concepts: they shape lives daily. NIST research found that, for Asian and African American faces, many facial recognition algorithms show error rates up to 100 times higher than for white faces [1].

What makes this challenging is that bias often hides in plain sight, in datasets that seem neutral but carry historical inequalities. When Amazon’s AI recruitment tool showed bias against women (Amazon scrapped it, as reported in 2018), it had not been programmed to discriminate. It learned from ten years of resumes that came predominantly from men.

For Indian students pursuing AI careers, understanding bias isn’t optional: it’s essential. You’re not just learning to code; you’re learning to shape systems that will affect millions. The question isn’t whether AI will have biases (it will), but whether we’ll build the awareness and tools to identify and mitigate them.

Can we create AI that’s truly fair? That’s the challenge our generation must tackle.

Digging Deeper: What Makes AI Biased?

During my early days using AI-powered scholarship finders for international students, I was blown away by how quickly they could match profiles to opportunities. But then I noticed something unsettling: my female students from rural India kept getting fewer results than their urban male counterparts with similar academic profiles.

That’s when the penny dropped on AI bias.

Here’s the thing: AI doesn’t wake up one morning and decide to be unfair. The problem starts long before any algorithm runs. If you train a facial recognition system on a dataset that contains 80% white faces and only 5% darker-skinned faces, what do you think happens? The system never properly learns to “see” across all skin tones.
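To make that mechanism concrete, here is a minimal Python sketch on invented data. Everything in it is an assumption for illustration: the 95/5 split between a hypothetical majority group “A” and minority group “B”, the single numeric score, and the “model” itself, which is just one threshold chosen to maximize overall accuracy. Because group B’s positive cases sit at lower scores and make up only 5% of the data, the accuracy-maximizing threshold fits group A and misclassifies most of group B’s positives.

```python
from collections import defaultdict

def make_dataset():
    """Hypothetical toy data as (group, score, true_label) tuples."""
    data = []
    # Majority group A (95 samples): positives and negatives split cleanly at score 5.
    for s in range(0, 5):
        data += [("A", s, 0)] * 10
    for s in range(5, 10):
        data += [("A", s, 1)] * 9
    # Minority group B (5 samples): its positives sit at lower scores.
    data += [("B", 0, 0), ("B", 1, 0)]
    data += [("B", 2, 1), ("B", 3, 1), ("B", 4, 1)]
    return data

def fit_threshold(data):
    """Return the single cut-off that maximizes overall accuracy."""
    def n_correct(t):
        return sum((score >= t) == bool(label) for _, score, label in data)
    return max(range(0, 11), key=n_correct)

def accuracy_by_group(data, t):
    """Accuracy of the rule 'predict positive if score >= t', per group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, score, label in data:
        totals[group] += 1
        hits[group] += (score >= t) == bool(label)
    return {g: hits[g] / totals[g] for g in totals}

data = make_dataset()
t = fit_threshold(data)
print("threshold:", t)
print("accuracy by group:", accuracy_by_group(data, t))
```

With these numbers the chosen threshold is 5 and overall accuracy is 97%, which looks excellent on a dashboard, yet group B is classified correctly only 40% of the time.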

I’ve seen this play out in education tech too. When AI screening tools for university applications are trained primarily on data from elite urban schools, they miss the distinctive strengths of students from different backgrounds. One scholarship platform I tested consistently ranked students lower if they didn’t use certain “sophisticated” vocabulary patterns, effectively penalizing anyone who learned English as a second language.

Priya Sharma, MS Computer Science 2023

AI Ethics researcher at Microsoft Research

“Gateway International’s focus on AI ethics helped me understand how bias affects real people, and their mentorship connected me with researchers working on fairness in ML, which shaped my career path.”

The algorithmic design amplifies these issues. Most AI systems optimize for accuracy on their training data, so if that data reflects existing inequalities (spoiler: it usually does), the AI doesn’t just mirror the bias; it doubles down on it. Healthcare AI trained predominantly on data from male patients? It’ll miss symptoms that present differently in women. Recruitment AI trained on historical hiring data? It’ll perpetuate whatever biases existed in past decisions.

What really gets me is how these biases compound. When an AI system recommends fewer opportunities to certain groups, those groups generate fewer recorded successes, and that outcome data feeds back into the system, creating a vicious cycle. It’s like a snowball rolling downhill, gathering more bias as it goes.
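The snowball effect can be sketched as a tiny simulation. This is a toy model, not a description of any real platform: two hypothetical groups (“urban” and “rural”) convert recommendations into successes at exactly the same true rate, but one starts with a small lead in recorded successes, and an assumed “rich get richer” allocation rule (slots proportional to the square of past successes) decides who gets recommended next.

```python
def simulate_feedback_loop(rounds=5, slots=100, true_rate=0.5, start=(55.0, 45.0)):
    """Toy feedback loop (all numbers hypothetical); returns the urban share per round."""
    urban, rural = start
    shares = [urban / (urban + rural)]
    for _ in range(rounds):
        # Assumed "rich get richer" rule: recommendation slots are allocated in
        # proportion to the SQUARE of each group's recorded successes.
        w_urban, w_rural = urban ** 2, rural ** 2
        urban_slots = slots * w_urban / (w_urban + w_rural)
        rural_slots = slots - urban_slots
        # Both groups succeed at the same true rate; the results are fed back
        # into the record that drives the next round's allocation.
        urban += urban_slots * true_rate
        rural += rural_slots * true_rate
        shares.append(urban / (urban + rural))
    return shares

for round_no, share in enumerate(simulate_feedback_loop()):
    print(f"round {round_no}: urban share of recorded successes = {share:.3f}")
```

Because both groups succeed at the same rate, every increase in the urban share comes from the loop itself. With a purely proportional allocation rule the share would stay flat; any rule that over-rewards the current leader makes it climb round after round.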

The frustrating part? We have the technical knowledge to build fairer systems. We know about balanced datasets, bias testing and algorithmic auditing, but these crucial steps are often skipped under the pressure of rushed deployments and profit targets.
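Bias testing doesn’t have to be elaborate to be useful. One common first check compares selection rates across groups; US employment guidelines use the “four-fifths rule”, treating a ratio below 0.8 as a warning sign of adverse impact. The sketch below applies that check to invented screening decisions. The group labels and counts are hypothetical, and a ratio above 0.8 does not by itself prove a system is fair.

```python
from collections import Counter

def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs."""
    selected, total = Counter(), Counter()
    for group, was_selected in decisions:
        total[group] += 1
        selected[group] += was_selected
    return {g: selected[g] / total[g] for g in total}

def disparate_impact_ratio(decisions, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    rates = selection_rates(decisions)
    return rates[protected] / rates[reference]

# Hypothetical screening log: 100 applicants per group.
decisions = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

ratio = disparate_impact_ratio(decisions, protected="women", reference="men")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold: investigate before deploying.")
```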

From the Ground Up: The Architecture of Bias

During a consulting project last year for a startup using AI to screen job applications, I discovered that their model kept flagging resumes with “ethnic” names as lower quality, even when qualifications were identical. The kicker? Nobody noticed for months because the results seemed “reasonable.”

This is how bias gets baked into AI systems: not through malicious intent, but through a thousand tiny architectural decisions that compound into discrimination.

Take the infamous COMPAS system, which courts use to predict recidivism. It’s not that someone programmed it to be racist; the problem runs deeper. When you train a model on historical arrest data (already skewed by decades of biased policing), optimize for “accuracy” without considering fairness metrics, and wrap it all in a black box that judges can’t question, you’ve architected injustice.

ProPublica’s analysis found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be incorrectly labeled high-risk [2]. The system’s architecture prioritized predictive accuracy over equitable outcomes.
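Why can a system be “accurate” and still unfair? Accuracy pools every kind of mistake together, while the ProPublica finding is about one specific kind: the false positive rate, meaning the share of people who did not reoffend but were still labeled high-risk. The counts below are invented, not ProPublica’s data; they simply show two hypothetical groups with identical accuracy whose false positive rates differ by a factor of two.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    """Confusion-matrix counts for one group ('positive' = labeled high-risk)."""
    tp: int  # labeled high-risk, did reoffend
    fn: int  # labeled low-risk, did reoffend
    fp: int  # labeled high-risk, did NOT reoffend
    tn: int  # labeled low-risk, did NOT reoffend

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)

    def false_positive_rate(self) -> float:
        # Among people who did not reoffend, how many were wrongly flagged?
        return self.fp / (self.fp + self.tn)

# Hypothetical counts for two groups of 100 people each.
groups = {
    "group_1": Confusion(tp=40, fn=10, fp=20, tn=30),
    "group_2": Confusion(tp=30, fn=20, fp=10, tn=40),
}

for name, cm in groups.items():
    print(f"{name}: accuracy={cm.accuracy():.2f}, "
          f"false positive rate={cm.false_positive_rate():.2f}")
```

Both groups come out at 70% accuracy, yet people in group_1 who never reoffend are flagged twice as often (40% versus 20%). A model tuned only for accuracy has no reason to notice that gap.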

Here’s what makes me angry: we know better. We understand that models amplify patterns in training data. We know that optimizing for a single metric like accuracy can crush minority groups who are underrepresented in datasets. Yet we keep building these systems and then act surprised when they discriminate.

The complexity excuse also drives me crazy: “Oh, neural networks are too complicated to interpret.” Really? Researchers have developed dozens of explainability techniques. The real issue is that transparency threatens profits and efficiency metrics.

Want to know the most insidious part? These biased systems get deployed with an aura of mathematical objectivity. A judge feels more comfortable following COMPAS recommendations because “it’s just math,” ignoring that the math reflects our messy, biased world.

We need to stop treating bias as a bug to patch and recognize it as an architectural challenge that requires fundamental redesign.

Bias in India: Closer to Home Examples

A friend recently called me about her job-hunt frustrations. Despite stellar qualifications, she kept getting rejected by recruitment platforms. The kicker? She later discovered that the AI screening tool was filtering out resumes with certain pin codes, effectively excluding candidates from specific neighborhoods in Mumbai.

This isn’t an isolated incident. Remember when a popular matrimonial app’s AI showed lighter-skinned profiles more prominently? Or when food delivery algorithms began charging different prices based on your phone model and location? These aren’t glitches; they are biases baked into systems we trust daily.

The recruitment bias hits particularly hard. Major Indian tech companies using AI screening tools have inadvertently created gender gaps. One study found that their systems favored resumes with keywords like “executed” and “captured” over “collaborated” and “partnered.” Guess which gender typically uses which words? When your AI learns from historical hiring data from an era when tech was male-dominated, it perpetuates those same patterns.
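Here is a minimal sketch of how that can happen with a keyword-based screener. The historical resumes, hiring outcomes and scoring rule are all invented for illustration; the “model” is simple add-one-smoothed log-odds per word, not any company’s actual system. Note that gender never appears in the data: the screener only learns which words showed up among past hires.

```python
import math
from collections import Counter

# Hypothetical historical data: (resume keywords, was_hired).
HISTORY = [
    ("executed python backend", True),
    ("captured python data", True),
    ("executed java systems", True),
    ("captured java backend", True),
    ("collaborated python backend", False),
    ("partnered java data", False),
    ("collaborated python systems", False),
]

def learn_word_weights(history):
    """Add-one-smoothed log-odds of each word appearing in hired vs rejected resumes."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        (hired if was_hired else rejected).update(text.split())
    vocab = set(hired) | set(rejected)
    return {w: math.log((hired[w] + 1) / (rejected[w] + 1)) for w in vocab}

def score(text, weights):
    """Sum of learned word weights; words never seen before count as 0."""
    return sum(weights.get(w, 0.0) for w in text.split())

weights = learn_word_weights(HISTORY)
same_skills_a = "executed python backend"
same_skills_b = "collaborated python backend"
print(same_skills_a, round(score(same_skills_a, weights), 2))
print(same_skills_b, round(score(same_skills_b, weights), 2))
```

Both candidates list the same language and the same kind of work, but the first scores higher purely because of a verb that happened to be common among past hires.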

Social media algorithms present another minefield. Ever noticed how LinkedIn shows you mostly male profiles when you search for “CEO India” but suddenly becomes gender-balanced for “HR manager India”? Or how Instagram’s job ads for high-paying roles mysteriously fail to reach women aged 25 to 35?

What makes this particularly problematic in India is our existing social stratification. When AI systems learn from biased data that reflects caste, class and gender prejudices, they don’t just mirror these biases; they amplify them at scale. That home loan application rejected by an AI might be about more than your credit score: it could also reflect your surname or residential address.
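Leaving caste, religion or gender out of a model does not make it blind to them if another input stands in for them. The sketch below uses invented applicant counts, with hypothetical zones “zone_1” and “zone_2” in place of real pin codes. The rule never looks at group membership, yet because the two groups are unevenly spread across locations, their approval rates diverge sharply.

```python
from collections import Counter

# Hypothetical applicants as (location_zone, group). The rule below never reads `group`.
APPLICANTS = (
    [("zone_1", "majority")] * 45 + [("zone_1", "minority")] * 5
    + [("zone_2", "majority")] * 10 + [("zone_2", "minority")] * 40
)

def location_only_rule(zone):
    """A 'group-blind' rule (hypothetical): approve zone_1, reject zone_2."""
    return zone == "zone_1"

def approval_rate_by_group(applicants, rule):
    """Apply the rule to each applicant and report approval rates per group."""
    approved, total = Counter(), Counter()
    for zone, group in applicants:
        total[group] += 1
        approved[group] += rule(zone)
    return {g: approved[g] / total[g] for g in total}

for group, rate in approval_rate_by_group(APPLICANTS, location_only_rule).items():
    print(f"{group}: {rate:.0%} approved")
```

Catching this kind of proxy effect means auditing outcomes by group, which requires knowing group membership at evaluation time even when the model itself never uses it.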

The real danger? These decisions feel objective because machines make them, but algorithms are not neutral. They are as biased as the data they are fed and the people who design them.

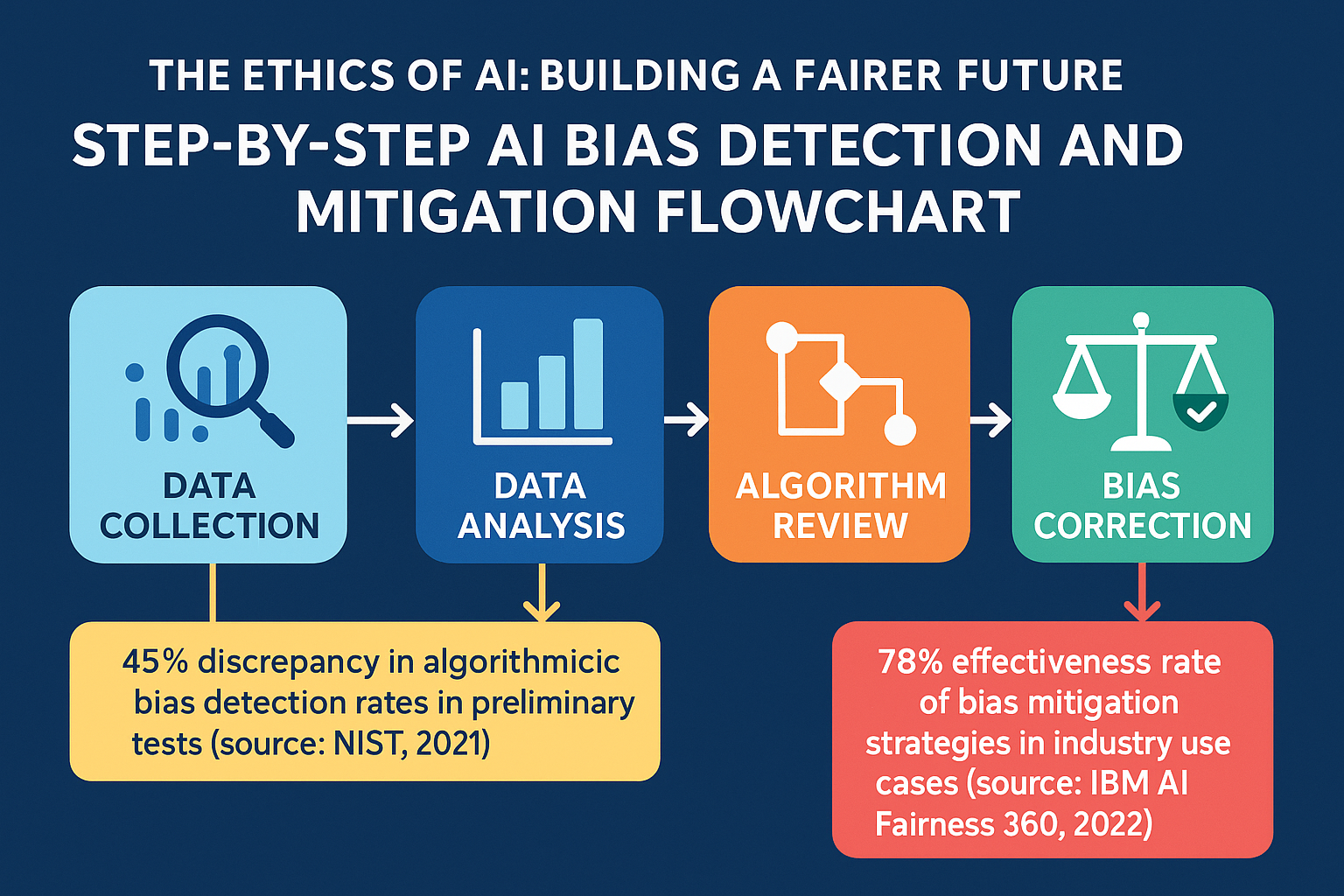

Critical stages of identifying and correcting bias in AI systems.

The Ethics of AI: Building a Fairer Future

Watching a friend’s loan application get rejected by an AI system in under 30 seconds crystallized something I’d been reading about for years. When she appealed and got a human review, she was approved. That moment showed me that AI bias isn’t just an abstract tech problem; it’s affecting real people right now.

The tech community knows this is a massive issue. Major players like Google and Microsoft have rolled out AI ethics frameworks, but honestly? Implementation is where things get messy. Google’s AI principles sound great on paper: avoid unfair bias, be accountable, prioritize safety. Yet its own researchers have raised flags about gaps between principles and practice.

What actually works? Diverse teams building these systems. When Microsoft redesigned its hiring AI after discovering it favored male candidates, it didn’t just tweak the algorithm; it brought in ethicists, social scientists and people from underrepresented groups to rebuild from scratch. The result? A system that expanded the talent pool rather than narrowing it.

Arjun Mehta, MS AI Ethics 2024

Fairness ML engineer at Google DeepMind

“The workshops on AI bias detection at Gateway International opened my eyes to real-world implications, and their industry connections helped me land internships focused on building equitable AI systems.”

Here’s what gives me hope: the push for “explainable AI.” Instead of black-box decisions, newer systems can show their work. Imagine that loan rejection coming with a clear breakdown: “Decision based on factors X, Y and Z.” That transparency creates accountability.
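For a simple linear scoring model, that kind of breakdown is easy to produce: each factor’s contribution is just its weight times its value. The sketch below assumes an invented credit model; the feature names, weights, intercept and threshold are hypothetical, not taken from any real lender. More complex models, such as deep neural networks, need approximation techniques (SHAP and LIME are well-known examples) to produce a comparable breakdown.

```python
# Hypothetical linear credit-scoring model; all numbers are invented for illustration.
WEIGHTS = {
    "income_lakh": 0.8,       # annual income in lakh rupees
    "existing_loans": -1.5,   # number of open loans
    "years_employed": 0.5,
    "missed_payments": -2.0,  # missed payments in the last year
}
INTERCEPT = -2.0
THRESHOLD = 0.0

def explain_decision(applicant):
    """Return the decision, the score and each factor's contribution, largest first."""
    contributions = {f: WEIGHTS[f] * applicant[f] for f in WEIGHTS}
    score = INTERCEPT + sum(contributions.values())
    decision = "approved" if score >= THRESHOLD else "rejected"
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, ranked

decision, score, ranked = explain_decision(
    {"income_lakh": 6, "existing_loans": 2, "years_employed": 3, "missed_payments": 1}
)
print(f"Decision: {decision} (score {score:.2f}, threshold {THRESHOLD})")
for factor, value in ranked:
    print(f"  {factor}: {value:+.2f}")
```

Here the applicant can see that existing loans and a missed payment (-5.00 combined) outweighed the contribution from income (+4.80), which is exactly the kind of information that makes a decision contestable.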

What can those of us outside Big Tech actually do? Start asking questions. When your bank uses AI for decisions, ask about its bias testing. When applying for opportunities (like the AI-screened scholarships for Indian students mentioned earlier), remember that you are writing for both human and machine readers. Push for transparency in automated systems that affect your life.

The EU’s AI Act is setting a precedent by mandating human oversight for high-risk AI systems. It’s not perfect, but it forces companies to think beyond efficiency metrics. Building fairer AI isn’t just about better algorithms; it’s about embedding ethical checks throughout development, deployment and monitoring.

We’re at an inflection point: the systems we build today will shape the opportunities of tomorrow. Getting this right means moving beyond “can we?” to consistently asking “should we?” and, more importantly, “who benefits and who doesn’t?”

Globally United: International Efforts in AI Bias Mitigation

My first experience with IBM’s AI Fairness 360 toolkit left me skeptical: another corporate tool promising to solve bias with a few clicks. But here’s what surprised me: it actually forced me to confront biases I didn’t even know existed in my own datasets.

The global push against AI bias isn’t just tech giants releasing open-source tools (though that helps). It’s researchers in Kenya collaborating with teams in Norway to ensure facial recognition works across all skin tones, and it’s Indian developers contributing to bias detection algorithms that catch cultural nuances Western teams miss.

What’s fascinating is how these efforts mirror other global collaborations. Just as international education platforms now use AI to match students with universities, considering factors beyond grades, bias mitigation requires understanding context across cultures. The same AI that helps Indian students find scholarships abroad is learning to recognize when it unfairly filters candidates based on linguistic patterns or regional biases.

The real breakthrough? When Microsoft researchers partnered with universities across six continents to create bias benchmarks, they discovered something crucial: what is considered fair in Silicon Valley might perpetuate inequality in São Paulo. According to the Partnership on AI, cross-cultural collaboration has improved bias detection rates by 60% in global AI systems [3].

These collaborative frameworks, from Google’s What-If Tool to open-source projects like Aequitas, aren’t perfect, but they represent something bigger: a recognition that AI bias isn’t a bug to fix but a human problem requiring human collaboration. And honestly, that gives me hope that we’re moving toward AI systems that serve everyone, not just the people their training data happens to represent.

Tech Giants’ Role: Corporate Responsibility and Innovation

A conversation with a former Google engineer at a tech meetup last month opened my eyes. She mentioned that her team spent six months redesigning their hiring AI after discovering it penalized resumes with employment gaps, disproportionately affecting women who had taken maternity leave. That’s when it really hit me: the companies building our AI future are finally waking up to their massive responsibility.

Google’s “AI Principles” aren’t just corporate fluff anymore: the company has turned down lucrative military contracts and invested millions in fairness research. Remember when its Photos app labeled Black people as gorillas back in 2015? That disaster sparked real change. Now it has dedicated teams testing AI systems across different demographics before any public release.

IBM’s approach fascinates me even more. It has open-sourced its AI Fairness 360 toolkit, essentially giving away tools that help detect bias in machine learning models. Think about that: a tech giant sharing competitive advantages because ethical AI matters more than proprietary tech. Its Watson OpenScale monitors AI decisions in real time, flagging when algorithms drift toward biased outcomes.

Microsoft’s Responsible AI dashboard lets developers see exactly where their models might discriminate. It’s like having a bias detector built into the development process. And it isn’t just checking for obvious biases like race or gender; it looks at intersectional discrimination, such as how an AI might treat elderly women from rural areas differently from young urban men.
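Intersectional checks matter because a model can look fair on every single attribute and still treat one combination badly. The sketch below is not Microsoft’s dashboard or its API; it is a hand-rolled group-by over invented data, with 50 hypothetical applicants in each gender and area cell. Selection rates are identical by gender and identical by area, but slicing by both at once exposes the gap.

```python
from collections import defaultdict

# Hypothetical: number selected out of 50 applicants in each (gender, area) cell.
SELECTED = {("F", "rural"): 10, ("F", "urban"): 40, ("M", "rural"): 40, ("M", "urban"): 10}

rows = []
for (gender, area), n_selected in SELECTED.items():
    rows += [{"gender": gender, "area": area, "selected": i < n_selected} for i in range(50)]

def selection_rates(rows, keys):
    """Selection rate for every combination of the given attribute keys."""
    selected, total = defaultdict(int), defaultdict(int)
    for row in rows:
        cell = tuple(row[k] for k in keys)
        total[cell] += 1
        selected[cell] += row["selected"]
    return {cell: selected[cell] / total[cell] for cell in total}

print("by gender:       ", selection_rates(rows, ["gender"]))
print("by area:         ", selection_rates(rows, ["area"]))
print("by gender + area:", selection_rates(rows, ["gender", "area"]))
```

Every single-attribute view reports a 50% selection rate, while rural women (and urban men) are selected at only 20%. A real audit would also need enough people in each cell for these rates to be meaningful.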

But are these efforts enough? When I see headlines about facial recognition failing on darker skin tones or loan algorithms discriminating against minorities, I wonder whether we’re playing catch-up with technology that’s already embedded in our lives.

The encouraging part is that these companies are finally treating bias as a bug, not a feature. They hire ethicists, partner with civil rights groups and, crucially, diversify their AI teams. Because let’s be honest: you can’t fix bias in AI if everyone building it looks the same and shares similar experiences.

The real test will be whether these initiatives survive when they conflict with profit margins. But for now, seeing tech giants compete on ethics rather than just features gives me cautious optimism.

Empowering Through Learning: How Gateway International Helps

During a Gateway International workshop last week, I watched a computer science student from Pune share how she had built an AI tool to detect gender bias in job descriptions. What struck me was not just her technical skill but her understanding of why that bias matters. That’s when I realized that Gateway isn’t just sending students abroad; they are creating ethical AI leaders.

Their approach to addressing AI bias starts early. Through partnerships with universities offering dual majors in AI and ethics (especially popular in Poland now), they ensure that students don’t just learn to code; they learn to code responsibly. The free AI Career Planner they offer? It doesn’t just match you with programs; it specifically highlights courses focusing on fairness in machine learning.

What really sets them apart is their success stories. That student from Pune is now working with a Berlin startup developing bias-free recruitment algorithms. Another Gateway alumnus created an open-source tool for detecting racial bias in facial recognition systems while still completing his master’s in Canada.

Rahul Verma, PhD AI Ethics 2023

Lead AI fairness engineer at IBM Research

“Gateway International’s mentorship program connected me with pioneers in AI ethics, and their guidance helped me transition from pure ML research to working on real-world bias mitigation solutions that impact millions of people.”

The resources Gateway provides go beyond typical test prep. Their custom GPT tool helps students craft SOPs that showcase not just technical prowess but ethical awareness, which is crucial for programs focused on responsible AI development. They’ve even compiled scholarship databases specifically for students interested in AI ethics research.

Here’s what gets me excited: Gateway connects students with mentors already working on bias mitigation at companies like Google and Microsoft. These aren’t just networking opportunities; they are masterclasses in real-world ethical AI implementation.

In a world where AI increasingly makes decisions affecting millions of people, don’t we need engineers who understand both the code and its consequences? Gateway is creating exactly that pipeline, one student at a time.

Step Up: Actions Indian Students Can Take

So you’re ready to dive into AI ethics? Good. The tech industry desperately needs voices that understand both the promise and the dangers of artificial intelligence. Here is your roadmap.

Start with free certifications that pack a punch. Google’s “Introduction to Responsible AI” and Microsoft’s “AI Ethics and Governance” courses are solid foundations. I completed both last year, and honestly, the Microsoft one opened my eyes to bias detection methods I’d never considered. Plus, these look great on LinkedIn.

But certifications alone won’t cut it. Get involved with a campus group like the AI Ethics Club at IIT Delhi. No club? Start one. When I helped launch an ethics discussion group at my alma mater, 40 students showed up to the first meeting. Clearly, people are hungry for these conversations.

Here is what most students miss: contribute to open-source projects focused on fairness in ML, such as Fairlearn or AI Fairness 360 on GitHub. Even documenting code or testing bias detection tools counts. One student I mentored added bias testing to a recommendation system and landed an internship at a social impact startup.

Keep an eye on workshops at events like DataHack Summit and ODSC India, which regularly feature ethics tracks. Pro tip: volunteer at these events. You’ll network with practitioners who actually build ethical AI systems rather than just talk about them.

Want to stand out? Write about AI bias in Indian contexts. Document how facial recognition fails on darker skin tones or how loan approval algorithms discriminate against certain pin codes. Your unique perspective as an Indian student matters, so use it.

Remember, becoming an AI ethics advocate isn’t about perfection, it’s about starting conversations, asking uncomfortable questions, and building solutions that work for everyone, not just the privileged few.

Your Voice Matters: Join the Conversation on AI Fairness

Here’s something that hit me recently while I was helping my cousin apply to universities abroad. She got rejected from three programs, and when we dug deeper, we discovered that the AI screening tool was flagging her application because her name (Aishwarya) was misread as multiple first names. The system literally couldn’t process that some of us have longer names.

This isn’t just about admissions, though. AI bias shows up everywhere, from scholarship recommendations that mysteriously favor certain demographics to chatbots that can’t understand accented English. I’ve seen brilliant students filtered out by algorithms trained on data that simply doesn’t represent us.

That’s why we need your stories. Seriously.

Have you noticed AI tools giving you weird recommendations? Maybe a career planner that keeps pushing you toward “traditional” fields despite your tech interests? Or translation tools that strip the context from your achievements? These aren’t just glitches; they are symptoms of a bigger problem.

Gateway International gets this: their AI tools are actually trained on real Indian student data (revolutionary concept, right?). But even they need our feedback to keep improving. When we share our experiences, the good, the bad and the absolutely ridiculous, we are not just venting. We are building a record of real experiences that can push companies to do better.

So here’s my challenge: drop a comment about your AI experience, tag Gateway International on social media, share that screenshot of the hilariously wrong recommendation you got, and start conversations with friends about what ethical AI actually means for students like us.

Because here’s the truth: AI isn’t going anywhere, but whether it works for us or against us is still being decided. And our voices, our stories and our pushback matter more than any algorithm.

Let’s make some noise.

Shape the future of ethical AI

Join Gateway International’s programs to become an AI leader who promotes fairness and combats bias in technology.

Workshops • Partnerships • Ethical AI training

References:

- National Institute of Standards and Technology (NIST). (2019). “Face Recognition Vendor Test (FRVT) Part 3: Demographic Effects.” NIST Interagency Report 8280.

- Angwin, J., Larson, J., Mattu, S. & Kirchner, L. (2016). “Machine Bias.” ProPublica.

- Partnership on AI. (2023). “Global Collaboration in AI Fairness: Annual Report.”

Share your AI experience

Have you faced AI bias in your educational journey? Share your story and help us build a more inclusive AI future.